Choosing the Right AI Framework: A Practical Guide for Builders and Developers

Should I use OpenAI's Agents SDK, Claude Agent SDK, or LangChain? And what about my frontend, backend, and automation stack?

If you’ve asked yourself this question, you’re not alone. The explosion of AI frameworks and tools has left many builders, both traditional developers and no-code enthusiasts, struggling to pick the right stack for their projects. The wrong choice can lead to wasted time, unnecessary complexity, or compliance nightmares. The good news is that there are clear patterns: each framework shines in particular contexts, and once you understand the “why” behind each choice, the decision becomes far less overwhelming.

This article breaks down the major players: OpenAI Agents SDK, Claude Agent SDK, and LangChain/LangGraph, plus the Model Context Protocol (MCP) for data connectivity. It then provides a clear framework for choosing the right tools for your project.

I have also provided a tool that makes it all easy for vibe coders, beginners and busy developers. Read to the end to get the free tool.

Read time: ~15 minutes

Understanding the Key Players (amongst others)

1. OpenAI Agents SDK

The OpenAI Agents SDK (launched March 2025) is a lightweight framework designed specifically for multi-agent coordination and handoffs.

Key Innovation: Intelligent handoffs between specialized agents - think of a triage agent that routes customer requests to billing, technical, or shipping specialists.

Core Features:

Multi-agent orchestration with handoffs

Built-in guardrails for input/output validation

Automatic session management and conversation history

Built-in tracing and observability

Provider-agnostic (works with OpenAI, Anthropic, and 100+ other LLMs)

Available in: Python and TypeScript/JavaScript (both fully supported)

Best for:

Multi-agent systems where different agents handle specialized tasks

Applications needing agent-to-agent delegation

Teams wanting rapid prototyping with safety guardrails

Projects requiring flexibility to switch LLM providers

Strengths:

Minimal abstractions make it easy to get started

Visual tracing helps debug agent execution flows

Handoffs enable clean separation of concerns

Not locked into one AI provider

Weaknesses:

Newer framework (smaller ecosystem than LangChain)

Less suited for complex, non-agent workflows

Limited tooling compared to more mature frameworks

Example use case: A customer support system where a triage agent analyzes each incoming query and hands it off to the right specialist: a billing agent handles payment issues, a technical agent handles bugs, and a shipping agent handles logistics, each with its own tools and expertise.

2. Claude Agent SDK

The Claude Agent SDK (renamed from the Claude Code SDK on September 29, 2025) provides the same production-ready agent harness that powers Claude Code.

Key Innovation: Battle-tested agent harness with automatic context management, built for long-running, complex tasks.

Core Features:

File operations, code execution, and web search built-in

Automatic context compaction (summarizes earlier context as the window fills, so long sessions don’t stall at the context limit)

Fine-grained permission controls

Subagents for specialized task delegation

Hooks for automated actions at specific points

Background tasks for long-running processes
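Compaction sounds abstract, so here is a rough Python sketch of the general idea: once the conversation exceeds a token budget, older messages are folded into one summary while the newest stay verbatim. This is not how the Claude Agent SDK implements it; the word-count token estimate, the `summarize` stub, and the `Conversation` class are assumptions for illustration.

```python
from dataclasses import dataclass, field


def count_tokens(text: str) -> int:
    """Crude estimate: one token per whitespace-separated word (not a real tokenizer)."""
    return len(text.split())


def summarize(messages: list[str]) -> str:
    """Placeholder; a real harness would ask the model to write this summary."""
    return f"[summary of {len(messages)} earlier messages]"


@dataclass
class Conversation:
    budget: int            # max tokens allowed in context
    keep_recent: int = 4   # newest messages that always stay verbatim
    messages: list[str] = field(default_factory=list)

    def total_tokens(self) -> int:
        return sum(count_tokens(m) for m in self.messages)

    def add(self, message: str) -> None:
        self.messages.append(message)
        if self.total_tokens() > self.budget:
            self.compact()

    def compact(self) -> None:
        """Fold everything except the newest messages into one summary message."""
        if len(self.messages) <= self.keep_recent:
            return  # nothing old enough to fold; the context stays over budget
        old = self.messages[:-self.keep_recent]
        recent = self.messages[-self.keep_recent:]
        self.messages = [summarize(old)] + recent


convo = Conversation(budget=50)
for i in range(20):
    convo.add(f"step {i}: checked log file and found nothing unusual")
print(len(convo.messages), convo.total_tokens(), convo.messages[0])
```

Note the edge case in `compact`: if the newest messages alone exceed the budget, this sketch leaves the context over budget, so a production harness needs a fallback for that case.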

Available in: Python and TypeScript

Best for:

Building sophisticated single-agent systems

Production agents that need to maintain context over hours

Applications requiring file system access and code execution

Teams already using Claude who want to extend its capabilities

Projects needing proven infrastructure (powers Claude Code, used by JetBrains, Cursor, etc.)

Strengths:

Production-tested infrastructure from Claude Code

Excellent automatic context management

Rich built-in tooling (file ops, code execution, web search)

Strong permission and safety controls

Integrates natively with Claude Desktop and major IDEs

Weaknesses:

Built around Claude models (Amazon Bedrock and Google Vertex AI support gives you other ways to host Claude, not other model families)

More opinionated than general orchestration frameworks

Newer SDK (ecosystem still growing)

Example use case: An internal SRE agent that diagnoses production issues by reading logs, analyzing metrics, executing diagnostic scripts, and proposing fixes, operating autonomously for hours while maintaining full context across the investigation.
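Permission controls and hooks are easiest to picture as a check that runs before every tool call. The Python sketch below shows that pattern for the SRE example, in the spirit of a pre-tool-use hook; it is not the Claude Agent SDK’s hook API, and the tool names, allowlist, and blocked patterns are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ToolCall:
    tool: str       # hypothetical tool name, e.g. "read_logs" or "bash"
    argument: str   # file path, metrics query, or shell command


ALLOWED_TOOLS = {"read_logs", "query_metrics", "bash"}                   # hypothetical allowlist
BLOCKED_PATTERNS = ("rm -rf", "kubectl delete", "drop table", "reboot")  # destructive commands


def pre_tool_hook(call: ToolCall) -> tuple[bool, str]:
    """Runs before every tool call and returns (allowed, reason)."""
    if call.tool not in ALLOWED_TOOLS:
        return False, f"tool {call.tool!r} is not on the allowlist"
    if call.tool == "bash":
        command = call.argument.lower()
        for pattern in BLOCKED_PATTERNS:
            if pattern in command:
                return False, f"command matches blocked pattern {pattern!r}"
    return True, "ok"


def execute(call: ToolCall) -> str:
    allowed, reason = pre_tool_hook(call)
    if not allowed:
        return f"DENIED: {reason}"
    return f"ran {call.tool}({call.argument!r})"  # placeholder for the real tool


for call in [ToolCall("read_logs", "/var/log/api.log"),
             ToolCall("bash", "kubectl delete pod api-7f9c"),
             ToolCall("write_file", "/etc/hosts")]:
    print(execute(call))
```

A substring blocklist is easy to bypass, so treat it as a last line of defense; the tool allowlist does most of the work.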

3. LangChain/LangGraph

LangGraph is the production-ready orchestration framework for building stateful, multi-step agent systems with full control over workflows.

Key Innovation: Graph-based workflow orchestration with built-in state management, time-travel debugging, and human-in-the-loop patterns.

Core Features:

Low-level control over agent orchestration and control flow

Persistent state management across sessions

Human-in-the-loop with ability to pause, review, and redirect

LangGraph Platform for deployment and scaling

LangSmith for observability, tracing, and evaluation

LangGraph Studio for visual debugging

Node-level caching and deferred execution

Available in: Python and JavaScript

Best for:

Complex multi-step workflows and pipelines

Custom orchestration patterns (sequential, parallel, conditional)

Applications requiring true model portability

Projects needing human checkpoints and approvals

Advanced RAG implementations

Teams wanting maximum control and customization

Strengths:

Most mature of the frameworks covered here (LangChain has been around since late 2022)

True multi-provider support (OpenAI, Anthropic, open-source)

Rich ecosystem: retrievers, evaluators, templates, integrations

Production-proven by Uber, LinkedIn, Klarna, Replit, Elastic

Excellent debugging with time-travel and visual inspection

LangGraph 1.0 launching October 2025 (actively maintained)

Weaknesses:

Steeper learning curve than simpler frameworks

More setup and complexity for straightforward use cases

Requires understanding graph-based workflow concepts

Example use case: A research-to-draft pipeline that:

(1) searches the web for sources,

(2) clusters findings by theme,

(3) queries internal databases for company data,

(4) generates section summaries,

(5) pauses for human approval,

(6) incorporates feedback,

(7) produces the final report, with full state tracking and the ability to rewind if needed.

4. Model Context Protocol (MCP)

MCP (released November 2024 by Anthropic) is an open protocol (not a framework) for connecting AI systems to data sources and tools. Think of it as “USB-C for AI.”

Key Innovation: Standardized interface for AI systems to access databases, APIs, and business tools without custom integrations.

Core Concept:

MCP Servers connect to specific data sources (Slack, Google Drive, databases, etc.)

MCP Clients embedded in AI applications query these servers

Standardized Interface means one integration works across multiple AI systems

Works with: any AI application that includes an MCP client, across OpenAI, Claude, Gemini, and open-source models, and all the frameworks above.

Pre-built servers available for:

Google Drive, Slack, GitHub, GitLab

Postgres, MySQL, MongoDB

Stripe, Asana, Notion

File systems, web browsers (Puppeteer)

...and many more in a growing ecosystem

Best for:

Securely connecting AI to internal company data

Standardizing data access across multiple AI applications

Avoiding custom integration code for each data source

Future-proofing your data connectivity layer

Official support:

Adopted by OpenAI (March 2025) - integrated into ChatGPT Desktop and Agents SDK

Native support in Claude Desktop, Claude API

Google DeepMind announced support in Gemini (April 2025)

Supported by Zed, Replit, Codeium, Sourcegraph, JetBrains

Important: MCP is a data connectivity layer that you can add to any framework - it’s not tied to Claude despite being created by Anthropic.

Example use case: A financial analysis agent that needs access to your company’s data in PostgreSQL, documents in Google Drive, and team conversations in Slack, using three MCP servers instead of building three custom integrations.

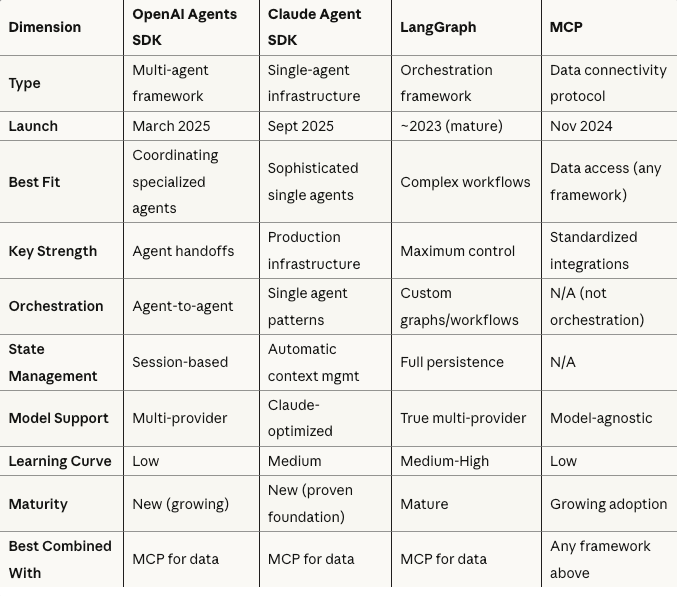

Head-to-Head Comparison

The Decision Framework: 4 Questions to Choose Your Stack

Instead of abstract dimensions, ask these four concrete questions in order:

Question 1: Do you need multiple coordinating agents, one sophisticated agent, or a multi-step workflow?

Multiple agents that hand off to each other:

Customer support with specialized teams (billing, technical, shipping)

Research workflows where different agents have different expertise

Systems where task delegation happens dynamically

→ Choose: OpenAI Agents SDK

One powerful agent handling complex tasks:

Code analysis and modification

Long-running research that requires maintaining context

Production troubleshooting and diagnostics

→ Choose: Claude Agent SDK

Multi-step workflow with conditional logic:

Data processing pipelines with decision points

Content generation with human approval gates

Complex integrations between multiple systems

→ Choose: LangGraph

Question 2: Do you need to connect to external data sources?

No external data needed:

Use any framework with built-in tools

Keep it simple: vanilla API calls might even suffice

Yes, need to access company data:

→ Add MCP servers (works with any framework above)

Available pre-built: Google Drive, Slack, GitHub, Postgres, etc.

Benefit: One standard integration instead of custom code

Yes, with complex querying/retrieval:

→ LangGraph + MCP for advanced RAG patterns

Benefit: Full control over retrieval logic and multi-hop reasoning

Question 3: How important is model portability?

Committed to one AI provider:

Fine to use provider-optimized tools

Less complexity, better integration

→ OpenAI Agents SDK (if OpenAI) or Claude Agent SDK (if Claude)

Want flexibility to switch providers:

Insurance against pricing changes or capability shifts

Ability to use different models for different tasks

→ OpenAI Agents SDK (100+ providers) or LangGraph (fully portable)

Need to compare models in production:

Run experiments with different providers

A/B test model performance

→ LangGraph (easiest to swap models)
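Portability mostly comes down to keeping provider-specific code behind one small interface. Here is a minimal Python sketch of that idea, plus a deterministic A/B split so each user consistently sees the same model during an experiment. `ChatModel`, `ProviderAModel`, `ProviderBModel`, and `pick_variant` are hypothetical names; real wrappers would call each provider’s client library inside `complete`.

```python
import hashlib
from typing import Protocol


class ChatModel(Protocol):
    """The only surface application code is allowed to depend on."""
    name: str

    def complete(self, prompt: str) -> str: ...


class ProviderAModel:
    """Hypothetical wrapper; a real one would call provider A's API client here."""
    name = "provider-a"

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class ProviderBModel:
    """Hypothetical wrapper for a second provider."""
    name = "provider-b"

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


def pick_variant(user_id: str, models: list[ChatModel], split: float = 0.5) -> ChatModel:
    """Deterministic A/B assignment: the same user always lands on the same model."""
    bucket = int(hashlib.sha256(user_id.encode()).hexdigest(), 16) % 100
    return models[0] if bucket < split * 100 else models[1]


def answer(prompt: str, model: ChatModel) -> str:
    # Swapping providers means passing a different object, not rewriting this function.
    return model.complete(prompt)


models = [ProviderAModel(), ProviderBModel()]
print(answer("Summarize this ticket", pick_variant("user-123", models)))
```

Hashing the user ID, rather than picking randomly per request, keeps each user’s experience consistent, which makes the A/B comparison cleaner.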

Question 4: What’s your timeline and team experience?

Ship in days / team new to agents:

Need quick wins and simple patterns

Learning curve must be minimal

→ OpenAI Agents SDK

Ship in 1-2 weeks / team comfortable with Claude:

Building production-grade single agent

Want proven, battle-tested infrastructure

→ Claude Agent SDK

Ship in 1-3 months / complex requirements:

Need custom orchestration patterns

Team can invest in learning advanced concepts

Requirements justify the complexity

→ LangGraph

Need immediate data access regardless of framework:

→ Start with MCP (works with all above)
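If it helps to see the four questions as logic, here is one possible encoding in Python. It is my reading of the questions, not an official algorithm; the field names and the 4-week threshold used for Question 4 are assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Project:
    shape: str                  # Q1: "multi_agent", "single_agent", or "workflow"
    needs_company_data: bool    # Q2: must the agent reach internal data?
    complex_retrieval: bool     # Q2: multi-hop querying / advanced RAG?
    must_stay_portable: bool    # Q3: is switching providers a hard requirement?
    timeline_weeks: float       # Q4: how soon must this ship?


@dataclass
class Recommendation:
    framework: str
    add_mcp: bool
    notes: list[str] = field(default_factory=list)


def recommend(p: Project) -> Recommendation:
    # Q1: the orchestration shape picks the starting framework.
    framework = {"multi_agent": "OpenAI Agents SDK",
                 "single_agent": "Claude Agent SDK",
                 "workflow": "LangGraph"}[p.shape]
    # Q2: complex retrieval points to LangGraph; any company data means adding MCP.
    if p.complex_retrieval:
        framework = "LangGraph"
    add_mcp = p.needs_company_data or p.complex_retrieval
    # Q3: of the three, the Claude Agent SDK is the one built around a single model family.
    if p.must_stay_portable and framework == "Claude Agent SDK":
        framework = "LangGraph"
    rec = Recommendation(framework, add_mcp)
    # Q4: flag a short timeline paired with the framework that has the steepest learning curve.
    if framework == "LangGraph" and p.timeline_weeks < 4:
        rec.notes.append("Q4 pairs LangGraph with a 1-3 month timeline; budget for the learning curve.")
    return rec


print(recommend(Project("multi_agent", needs_company_data=True, complex_retrieval=False,
                        must_stay_portable=False, timeline_weeks=1)))
```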

Decision Matrix: Quick Reference

Choose OpenAI Agents SDK if:

✅ You need multi-agent coordination with intelligent handoffs

✅ You want to prototype and ship quickly

✅ Provider flexibility matters (want to switch models easily)

✅ Your team prefers minimal abstractions

✅ You’re building agent-based routing/delegation systems

Choose Claude Agent SDK if:

✅ You’re building one sophisticated agent (not multiple)

✅ Tasks are long-running and complex (hours, not minutes)

✅ You need production-grade context management

✅ File system access and code execution are important

✅ You want infrastructure proven by Claude Code

Choose LangGraph if:

✅ You need complex orchestration with custom control flows

✅ Human-in-the-loop with state management is critical

✅ Model portability is a top priority

✅ You require advanced debugging and observability

✅ Your team can invest in the learning curve

✅ Maximum control justifies added complexity

Add MCP (with any framework) if:

✅ You need to connect to internal company data

✅ You want standardized integrations (not custom code)

✅ You’re accessing databases, file systems, or business tools

✅ Future-proofing data connectivity matters

Real-World Hybrid Approaches

Most production systems combine multiple tools. Here are common patterns:

Pattern 1: LangGraph + MCP

Use case: Complex research pipeline with data access

LangGraph orchestrates the multi-step workflow

MCP provides access to internal documents and databases

Result: Custom workflow logic + standardized data connectivity

Pattern 2: OpenAI Agents SDK + MCP

Use case: Multi-agent customer support

OpenAI SDK handles agent coordination and handoffs

MCP connects to Zendesk, internal CRM, and knowledge base

Result: Specialized agents with rich data access

Pattern 3: Claude Agent SDK + MCP

Use case: Internal code assistant

Claude Agent SDK provides the sophisticated agent infrastructure

MCP connects to GitHub, Jira, and documentation systems

Result: Production-grade agent with company context

Pattern 4: Mix and Match

Use case: Enterprise AI platform

LangGraph orchestrates overall workflow

OpenAI Agents SDK handles customer-facing support sub-system

Claude Agent SDK powers internal development tools

MCP provides unified data access across all systems

Result: Best tool for each job

Key insight: These tools are complementary, not mutually exclusive. Choose based on each subsystem’s needs.

Beyond the Frameworks: Choosing the Rest of Your Stack

Frontend

Next.js → SSR, dashboards, complex auth, SEO-critical sites

React + Vite → Simple SPAs, internal tools, fast dev cycles

SvelteKit → Lightweight apps, excellent performance, smaller bundle sizes

Streamlit → Quick AI demos, data science tools (Python-native)

Backend

FastAPI → Python teams, async workloads, ML-adjacent, excellent for streaming LLM responses

Node/Express → JS/TS teams, rapid prototyping, simple APIs

NestJS → Enterprise TypeScript, structured architecture, microservices

Django → CRUD-heavy apps, admin panels, mature ecosystem

Database & Vector Search

PostgreSQL (via Neon/Supabase/Railway) → Default choice for OLTP

pgvector → Vector search in Postgres (fewer moving parts)

Pinecone → Managed vector DB, excellent for RAG

Qdrant → Self-hosted vector DB alternative

Redis → Caching, session storage

S3/Cloudflare R2 → File storage
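Every vector option above answers the same query: “which stored embeddings are closest to this one?” The brute-force Python sketch below shows that with cosine similarity on toy 3-dimensional vectors; the document names and vectors are made up. In pgvector the equivalent is roughly `ORDER BY embedding <=> query LIMIT k` (`<=>` is its cosine-distance operator), and pgvector also supports approximate indexes such as HNSW and IVFFlat so it doesn’t have to scan every row.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """1.0 means same direction; values near 0 mean unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


def top_k(query: list[float], index: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Exact (brute-force) nearest neighbours, highest similarity first."""
    scored = [(doc_id, cosine_similarity(query, vector)) for doc_id, vector in index.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Toy embeddings; real ones come from an embedding model and have hundreds or thousands of dimensions.
index = {
    "refund-policy":  [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "api-errors":     [0.0, 0.2, 0.9],
}
query = [0.8, 0.2, 0.0]  # imagine the embedding of "how do I get my money back?"
for doc_id, score in top_k(query, index):
    print(f"{doc_id}: {score:.3f}")
```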

Workflow Automation

GitHub Actions / Vercel Cron → Simple scheduled jobs

Temporal → Long-running, retryable workflows (pairs well with OpenAI Agents SDK)

Airflow/Prefect → Data pipelines, ETL jobs

n8n (self-hosted) / Zapier/Make → No-code automation, backoffice workflows
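“Retryable” is the key word for Temporal: a failed step is retried automatically, and workflow state survives crashes. Temporal does this through retry policies configured on activities; the Python sketch below only shows the underlying retry-with-exponential-backoff idea for one flaky step, such as an LLM call that times out. It is not Temporal’s API, and `retry` and `flaky_llm_call` are hypothetical.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry(fn: Callable[[], T], attempts: int = 5, base_delay: float = 0.5,
          sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn, retrying failures with exponential backoff plus random jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except Exception:
            if attempt == attempts:
                raise  # out of attempts: surface the last error
            # 0.5s, 1s, 2s, ... each scaled by a random factor between 0.5 and 1
            sleep(base_delay * 2 ** (attempt - 1) * random.uniform(0.5, 1.0))
    raise AssertionError("unreachable")


calls = {"count": 0}


def flaky_llm_call() -> str:
    """Hypothetical step that times out twice before succeeding."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise TimeoutError("model request timed out")
    return "summary ready"


delays: list[float] = []
print(retry(flaky_llm_call, sleep=delays.append), delays)
```

Injecting `sleep` keeps the example fast and testable; the jitter stops many workers from retrying in lockstep after a shared outage. What this sketch cannot do, and Temporal can, is remember progress if the process itself dies mid-workflow.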

Observability & Monitoring

LangSmith → LLM-specific tracing, evaluation, and monitoring

Sentry → Error tracking and performance monitoring

OpenTelemetry → Distributed tracing across services

Logfire → Python-native observability (Pydantic team)

Datadog/New Relic → Enterprise monitoring

Deployment

Vercel → Next.js, serverless, excellent DX

Railway/Render → Full-stack apps, databases included

Fly.io → Global edge deployment

AWS/GCP/Azure → Enterprise deployments with compliance needs

Cloudflare Workers → Edge functions, ultra-low latency

Example Use Cases with Full Stack Recommendations

1. Customer Support Bot (Multi-Agent)

Requirement: Handle billing, technical, and shipping inquiries with specialized knowledge per domain.

Stack:

Agent Framework: OpenAI Agents SDK (handoffs between specialists)

Data Access: MCP → Zendesk, internal CRM, Stripe

Frontend: Next.js (customer portal) + React

Backend: Node.js/Express

Database: PostgreSQL (Supabase) + Redis (cache)

Deployment: Vercel

Why: Need quick handoffs between specialized agents. OpenAI SDK’s handoff patterns are perfect for this. MCP provides standardized access to support systems.

Timeline: 2-3 weeks

2. Internal Code Assistant (Single Sophisticated Agent)

Requirement: Analyze codebases, suggest improvements, generate documentation, maintain context across hours-long sessions.

Stack:

Agent Framework: Claude Agent SDK (production infrastructure)

Data Access: MCP → GitHub, Jira, Confluence

IDE Integration: Native (Claude Desktop / JetBrains)

Backend: Python/FastAPI

Database: PostgreSQL (code analysis cache)

Deployment: Self-hosted / Railway

Why: Need sophisticated single agent with long-running context. Claude Agent SDK’s proven infrastructure (powers Claude Code) is ideal. MCP connects to development tools.

Timeline: 3-4 weeks

3. Research-to-Report Pipeline (Complex Workflow)

Requirement: Search web → cluster findings → query internal data → generate sections → get human approval → produce final report.

Stack:

Agent Framework: LangGraph (custom workflow orchestration)

Data Access: MCP → Google Drive, PostgreSQL, Slack

Frontend: Streamlit (internal tool)

Backend: Python/FastAPI

Database: PostgreSQL + pgvector (vector search for RAG runs inside Postgres, so no separate vector DB)

Observability: LangSmith

Deployment: Railway / Fly.io

Why: Complex multi-step workflow with conditional logic and human checkpoints. LangGraph’s state management and human-in-the-loop patterns are essential. MCP provides data access.

Timeline: 6-8 weeks

4. Financial Compliance Agent

Requirement: Monitor transactions, flag suspicious activity, generate compliance reports, require approval for alerts.

Stack:

Agent Framework: Claude Agent SDK (single sophisticated agent)

Data Access: MCP → Internal database, document storage

Backend: Python/FastAPI

Database: PostgreSQL + audit logs

Workflow: Temporal (for durable execution)

Monitoring: LangSmith + Datadog

Deployment: AWS (compliance requirements)

Why: Needs sophisticated analysis with strict permissions. Claude Agent SDK’s permission controls, combined with MCP servers that expose only the data the agent is allowed to see, help provide the necessary governance.

Timeline: 8-12 weeks (includes compliance review)

5. Content Generation Pipeline (Team Collaboration)

Requirement: Generate blog posts, get editor approval, schedule publishing, track performance.

Stack:

Agent Framework: OpenAI Agents SDK (writer + editor + SEO agents)

Data Access: MCP → WordPress/CMS, Google Analytics

Frontend: Next.js (content dashboard)

Backend: Node.js/NestJS

Database: PostgreSQL + Redis

Scheduling: Vercel Cron

Deployment: Vercel

Why: Multiple specialized agents (writing, editing, SEO) coordinating through handoffs. OpenAI SDK’s multi-agent patterns fit naturally.

Timeline: 3-4 weeks

6. Multi-Tenant SaaS Platform (Scaling)

Requirement: Serve multiple customers with isolated data and custom agent configurations per tenant.

Stack:

Agent Framework: LangGraph (maximum control + customization per tenant)

Data Access: MCP → Per-tenant databases

Frontend: Next.js + React

Backend: NestJS (TypeScript)

Database: PostgreSQL (multi-tenant schema)

Caching: Redis

Observability: LangSmith + OpenTelemetry

Deployment: AWS / GCP (enterprise)

Why: Need full control over agent behavior and data isolation per tenant. LangGraph’s flexibility allows custom configurations.

Timeline: 12-16 weeks

When You DON’T Need a Framework

Not every project requires an agent framework. Use vanilla API calls when:

✅ Single LLM call with simple tool use

✅ No multi-step reasoning required

✅ No state management needed

✅ Quick prototype or proof-of-concept

✅ Budget is extremely tight

Example: A simple “summarize this document” feature doesn’t need LangGraph. Just call the OpenAI or Claude API directly.

Rule of thumb: If your agent doesn’t need to decide what to do next based on results, you probably don’t need a framework.
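The rule of thumb above is the difference between a single call and a loop in which the model’s next action depends on the last result. Here is a library-free Python sketch of both shapes, where `scripted_decide` stands in for an LLM and the tool names and order data are hypothetical.

```python
from typing import Callable


def summarize_document(text: str, llm: Callable[[str], str]) -> str:
    """No framework needed: one prompt in, one answer out."""
    return llm(f"Summarize:\n{text}")


def agent_loop(goal: str,
               decide: Callable[[list[str]], tuple[str, str]],
               tools: dict[str, Callable[[str], str]],
               max_steps: int = 5) -> str:
    """The model chooses each next action from everything observed so far."""
    history = [f"goal: {goal}"]
    for _ in range(max_steps):
        action, argument = decide(history)
        if action == "finish":
            return argument
        observation = tools[action](argument)
        history.append(f"{action}({argument}) -> {observation}")
    return "stopped: step limit reached"


def scripted_decide(history: list[str]) -> tuple[str, str]:
    """Stand-in for an LLM: picks the next step based on the latest observation."""
    last = history[-1]
    if last.startswith("goal:"):
        return "check_status", "order-17"
    if last.endswith("delayed"):
        return "lookup_carrier", "order-17"
    return "finish", f"Answer based on: {last}"


tools = {
    "check_status": lambda order_id: "delayed",
    "lookup_carrier": lambda order_id: "arriving Friday",
}
print(agent_loop("Where is order-17?", scripted_decide, tools))
```

Once that loop also needs retries, persistence, approvals, or several cooperating agents, a framework starts to earn its keep.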

Applying This Framework to Your Project

Before building, answer these questions:

Architecture Questions:

1. Do I need multiple agents coordinating, or one sophisticated agent?

2. How many steps are in my workflow? (1-2 = simple, 3-5 = medium, 6+ = complex)

3. Do I need human approval at any point?

4. Must the agent maintain context across long sessions (hours)?

Data Questions:

5. What external data sources do I need to access?

6. Can I use pre-built MCP servers, or need custom integrations?

7. How sensitive is my data? (affects permission/security requirements)

Practical Questions:

8. Can I commit to one AI provider, or need flexibility?

9. What’s my timeline? (days/weeks/months)

10. What’s my team’s experience level with agent frameworks?

Based on your answers:

Mostly 1-2 step workflows, quick timeline, new team → OpenAI Agents SDK

One agent, long-running, proven infrastructure needed → Claude Agent SDK

Complex workflows, human-in-the-loop, maximum control → LangGraph

Any external data access → Add MCP

A Shortcut: Let AI Help You Decide

If this feels overwhelming, I’ve built a tool that implements this decision framework for you.

It will:

Ask about your specific project requirements

Apply this decision framework automatically

Explain the “why” behind each recommendation

Provide a complete stack suggestion (framework + supporting tools)

Adapt explanations based on your experience level

Cross-check recommendations against official documentation

How to use:

Describe your project idea

Answer a few clarifying questions

Get a detailed stack recommendation with rationale

Ask follow-up questions to refine

It’s free to use and based on the framework in this article.

Key Takeaways

There’s no single “best” AI framework. The right choice depends on your specific needs:

OpenAI Agents SDK → Fast multi-agent coordination with handoffs

Claude Agent SDK → Production-grade infrastructure for sophisticated single agents

LangGraph → Maximum control for complex, custom workflows

MCP → Standardized data connectivity (works with all frameworks)

The winning approach:

Start with the 4-question decision framework

Choose your agent framework based on orchestration needs

Add MCP for data connectivity (if needed)

Select supporting stack based on team expertise and requirements

Remember: you can combine tools (most production systems do)

Most important: Understanding why a tool fits your context matters more than following trends or using the “newest” framework.

What’s Next?

Have a project in mind? Use the Stack & Agent Advisor GPT to get a personalized recommendation.

Subscribe to stay updated as these frameworks evolve (all are actively developed in 2025).

Join the discussion: What are you building? Which framework did you choose and why? Drop a comment below.

Last updated: October 1, 2025

Note: The AI framework landscape evolves rapidly. Always check official documentation for the latest features and best practices:

Ready to build? The right stack is waiting for you.